Billion-dollar tech company representatives, academics, media practitioners, members of civil and polite society, and a whole lot of other people have been called upon to give oral evidence before the Select Committee on Deliberate Online Falsehoods.

The hearings are scheduled to stretch over eight days in March 2018, and five days have been completed so far.

Reams of transcripts have been produced, with statements touching on a wide variety of topics and heading off in many different directions.

So, for the lay Singaporean: What is the point of all this talking and what is it supposed to achieve?

More regulation, right?

For people who have been following the proceedings and are familiar with the way Singapore operates, the outcome already seems fairly obvious.

If bookmakers were taking bets on either more regulation or no regulation, we can safely say that betting would have been called off prematurely.

By going to all this bother with public hearings -- flying in experts, taking people away from their busy schedules to talk -- the Singapore government has positioned itself as very willing to take the lead in tackling what it considers a real national problem today.


Example of content being targeted

Let's be very clear though: with the hearings not yet completed, no one can tell at this point what kind of new regulations, if any, will come out of this exercise.

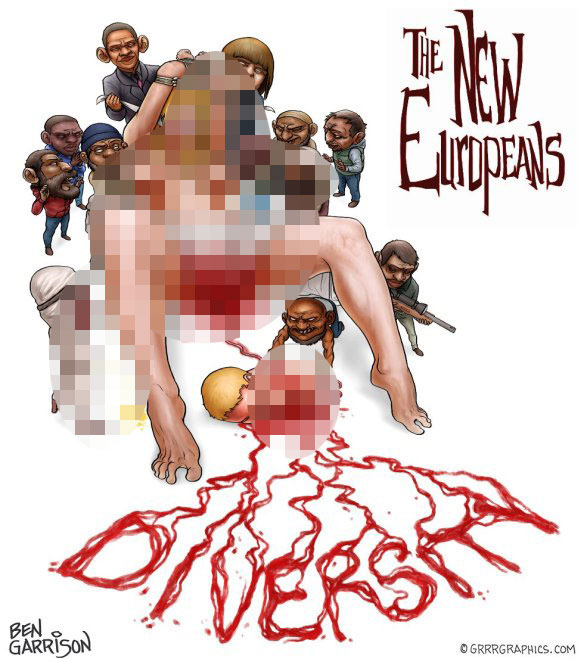

But on the fifth day of public hearings on March 23, a glimpse emerged of the kind of content that could be targeted for take-downs.

Towards the tail-end of the session where Mothership.sg managing director Lien We King and deputy and managing editor Martino Tan were giving their statements, Marine Parade GRC MP Edwin Tong spent 10 minutes building up a thorough case, citing a piece of content that ought to be taken down if it were aimed at Singapore.

An uncensored screenshot of the content was shown at the hearing (picture above). It was a graphic anti-immigration drawing that originated in Europe and was disseminated primarily via Twitter.

It showed caricatures of refugees violating a woman of Caucasian descent and engaging in infanticide -- bleeding into the word "Diversity".

In the lead-up to this image being shown, Tong said:

We have been told by several witnesses, there has been a prevalence of disinformation campaigns throughout the world. It is essentially seeking to threaten to undermine national sovereignty and national security. It is also part of a military arsenal in some cases. Sometimes it takes place even in the absence of open conflict. It is also an attractive option because the costs and manpower requirements of doing it is relatively low and we've heard evidence on how bot farms are created, clickbaits are created.

They are highly effective, they also reduce the need to deploy military power, if the agenda is to undermine national security, national identity -- then deploying this is low cost, low manpower, and you don't even need to deploy military might. It carries a lower cost of detection where it can be subtle, obscured. People who use it, of course, in this area of national interest, could be state actors. But we've also been told that non-state actors also use it and sometimes it is used by state actors but shared by non-state actors domestically.

The message was that the Singapore government would be compelled to act if this sort of content was created and disseminated to target Singapore, given its purpose of sowing discord and widening fault lines in a multi-ethnic society.

In this instance, the tech company that enables the hosting and sharing of this image could be asked, or made, to take it down for national security reasons.

It was revealed that the image remained in circulation in Europe.

Implications

There are three things that can be said.

Firstly, the main thrust of these hearings so far has been that there is no defence for deliberate online falsehoods, also repeatedly referred to as "DOFs", in any sovereign country with a functioning society.

This is hard to argue against, or even to play devil's advocate against.

Secondly, based on the direction of the hearings thus far, the focus appears to have shifted beyond mainstream and online media as content distribution channels to tech companies, which can no longer claim to be merely platforms hosting content created and shared by individuals.

Tech companies, which are situated thousands of kilometres away from Singapore, are increasingly characterised as enablers.

More importantly, the hearings showed that the tech companies are either unwilling or unable to self-regulate on issues that exacerbate the fault lines of complex multi-religious and multi-racial democratic societies.

Thirdly, due to the sheer number of stakeholders involved, there is a chance different regulations of varying impact could be introduced to deal with different violations.

And even though various stakeholders have a responsibility to stick to putting out the truth, the difficulty lies in deciding who can be the ultimate arbiter of what is true or false -- and of the degree of truthiness.

How to assess content high on shock value?

Coming back to the image above as a prime example: It is definitely high in shock value and would offend religious and racial sensibilities.

But will it fulfil the strict criteria of an image that is i) created with malicious intent and ii) a falsehood and not satire?

In other words, is the government able to demand that social media platforms like Facebook, Twitter and Google immediately delete such an image, with or without debate and/or opposition?

It would appear that the government wants such a "nuclear option": the power to demand the immediate take-down of content, so that swift take-downs become a way of life.

But will non-state actors be able to use such a law? Will it become a situation of mutual assured destruction, when too many actors are given such abilities?

Three more days of hearings to go.

We shall see.
