
Meta has built the first artificial intelligence (AI) system to translate Hokkien, a language that is primarily spoken rather than written.

The company, formerly known as Facebook, is training AI to translate hundreds of languages in real time, and has been developing its Universal Speech Translator.

The Universal Speech Translator is a machine learning model that will eventually allow real-time translation across many spoken and written languages, so that anyone can communicate easily.

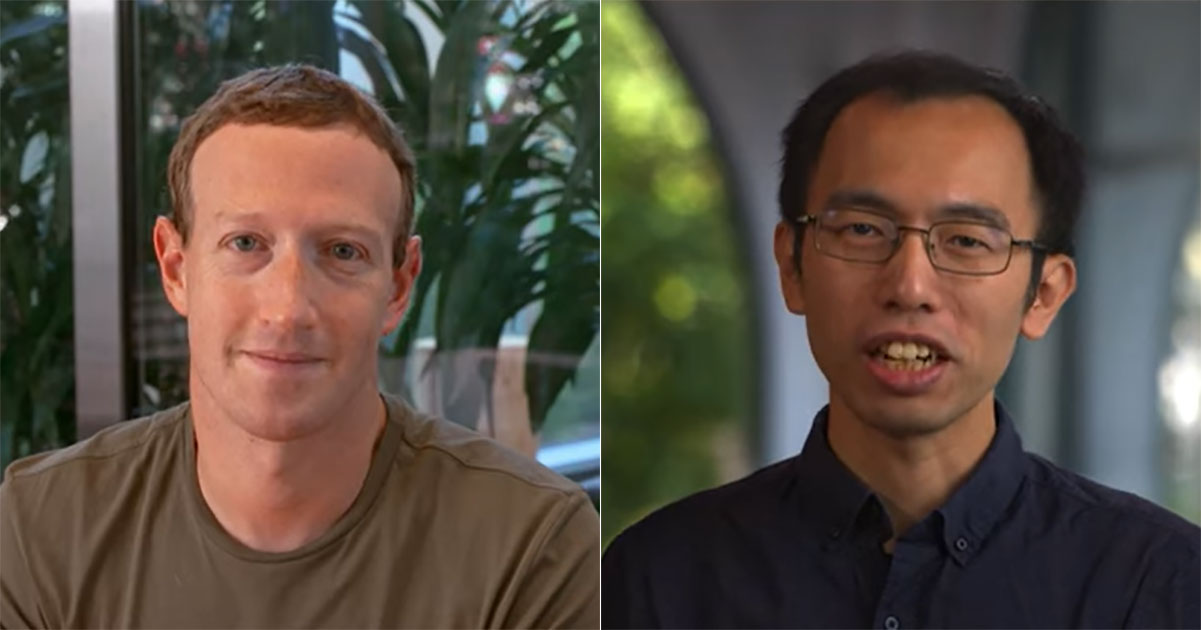

Working on the project is Peng-Jen Chen, a Meta AI researcher who grew up in Taiwan speaking Mandarin Chinese. His father spoke Taiwanese Hokkien, which gives the project a personal angle.

We’re developing AI for real-time speech translation to break down language barriers in the physical world and in the metaverse. https://t.co/PC0BzLCHAr pic.twitter.com/wW8NlyndmM

— Meta Newsroom (@MetaNewsroom) October 19, 2022

Why is Hokkien difficult to translate?

Hokkien is a language that is spoken by approximately 49 million people in southeastern mainland China and Taiwan, and within the Chinese diaspora in Singapore, Indonesia, Malaysia, the Philippines and other parts of Southeast Asia.

According to Meta, Hokkien, which is considered a dialect in Singapore, is among the 40 per cent of the world's 7,000 living languages that are primarily spoken.

Because Hokkien does not have a unified, standard writing system, there is not a large enough dataset of written text to train the AI.

That is also why Meta focused on a speech-to-speech approach.

Meta's results so far, although still a work in progress, appear to be a breakthrough in speech translation, especially for translation that works in real time.

How is translation achieved?

The company also described how the system works.

The process combines automated systems and human annotators to keep the translations nuanced and precise.

The researchers first had to find an intermediate language to bridge Hokkien and English.

They chose Mandarin, because it is close to Hokkien, to help build the initial model.

“Our team first translated English or Hokkien speech to Mandarin text, and then translated it to Hokkien or English — both with human annotators and automatically,” said Meta researcher Juan Pino.

“They then added the paired sentences to the data used to train the AI model.”
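The pairing step Pino describes can be pictured with a small Python sketch. This is only a toy illustration under stated assumptions, not Meta's code: the lookup tables stand in for real translation systems or human annotators, and the Hokkien clip names are hypothetical placeholders for recorded speech.

```python
from typing import Dict, List, Optional, Tuple

# Toy stand-ins for real translators or human annotators (assumptions for illustration).
ENGLISH_TO_MANDARIN: Dict[str, str] = {"hello": "你好", "thank you": "謝謝"}
# Hypothetical file names standing in for Hokkien speech clips.
MANDARIN_TO_HOKKIEN: Dict[str, str] = {
    "你好": "hokkien_clip_001.wav",
    "謝謝": "hokkien_clip_002.wav",
}


def pivot_translate(
    source: str, to_pivot: Dict[str, str], from_pivot: Dict[str, str]
) -> Optional[str]:
    """Translate source -> Mandarin text -> target; return None if either step fails."""
    pivot_text = to_pivot.get(source)
    if pivot_text is None:
        return None
    return from_pivot.get(pivot_text)


def build_pairs(
    sources: List[str], to_pivot: Dict[str, str], from_pivot: Dict[str, str]
) -> List[Tuple[str, str]]:
    """Keep only the sentences that made it through both translation steps."""
    pairs = []
    for source in sources:
        target = pivot_translate(source, to_pivot, from_pivot)
        if target is not None:
            pairs.append((source, target))
    return pairs


training_pairs = build_pairs(
    ["hello", "thank you", "good night"], ENGLISH_TO_MANDARIN, MANDARIN_TO_HOKKIEN
)
print(training_pairs)
```

In this sketch, "good night" is dropped because the toy table has no Mandarin entry for it. Only complete English-Hokkien pairs would be added to the training data.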

The researchers also actively worked with native Hokkien speakers to make certain that the AI translation models were accurate.

Meta also explained, in more technical terms, that the input speech is translated into a sequence of acoustic units, which are then used to generate waveforms of the target language.

This approach was combined with the use of Mandarin text as an intermediate step.

Open to further development, testing

The researchers will make their model, code and benchmark data freely available so that others can build their own real-time AI translation tools.

Currently, the translator can translate only one sentence at a time, with the eventual goal of simultaneous translation.

The demo version of the translator is open to the public here.

Top photos via Mark Zuckerberg
