Harmful online content most seen on Facebook & Instagram: MDDI survey

The poll found that two-thirds of respondents encountered harmful content on the six designated social media platforms.

Most social media users encounter some form of harmful content, according to a survey by the Ministry of Digital Development and Information (MDDI).

The annual Online Safety Poll, conducted in April 2024, surveyed 2,098 Singaporeans aged 15 and above.

It found that 74 per cent of respondents encountered harmful content online this year.

This is a marked increase from 65 per cent in 2023.

The harmful content that respondents encountered most often involved cyberbullying and sexual content, followed by violent content and content inciting racial or religious tension.

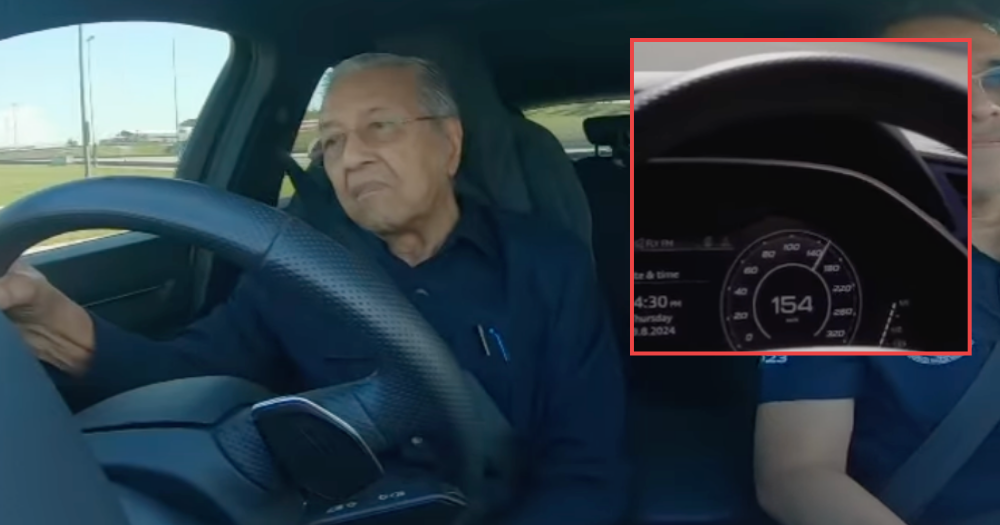

Prevalent on social media

66 per cent of respondents encountered harmful content on the six social media services designated by the Infocomm Media Development Authority (IMDA) under the Code of Practice for Online Safety, up from 57 per cent in 2023.

Among these respondents, about 60 per cent encountered harmful content on Facebook and 45 per cent on Instagram, making these two platforms the most common sources of such encounters.

TikTok, YouTube, X, and HardwareZone followed.

Image via MDDI


Rise in content inciting racial or religious tension

Of the various forms of harmful content found on social media platforms, cyberbullying (45 per cent) and sexual content (45 per cent) remained the most common.

There was also a notable increase in content inciting racial or religious tension, as well as violent content.

These were up by 13 per cent and 19 per cent, respectively, compared to 2023.

Image via MDDI


More choose to ignore

Despite how prevalent such content was, most respondents who came across it chose to ignore it.

This was because they either didn't see the need to take action, were unconcerned about the issue, or believed that making a report would not make a difference.

Meanwhile, 35 per cent blocked the offending account or user, and 27 per cent reported the content to the platform, but the reporting process was not always smooth sailing.

Of those who reported content, eight in 10 experienced issues with the reporting process.

Such issues included the platform not taking down the harmful content or disabling the responsible account, not giving an update on the outcome, and allowing removed content to be reposted.

Government efforts

The government has made several strides towards shielding the public from online harm.

Since February 2023, amendments to the Broadcasting Act have allowed the government to quickly disable access to "egregious" content on designated social media sites.

In July 2023, the Code of Practice for Online Safety took effect.

The code requires designated social media sites to minimise children’s exposure to inappropriate content and to provide users with tools to manage their own safety.

Minister for Digital Development and Information Josephine Teo also said a new Code of Practice for app stores will help designated app stores better check users' ages.

Beyond the government

While the government works towards combating harmful content online, MDDI indicated that other stakeholders also have a part to play.

Under the Code of Practice for Online Safety, designated social media services need to submit their first online safety compliance reports by end-July 2024.

IMDA will assess their compliance and consider whether any requirements need to be tightened.

As for users, MDDI urged them to report any harmful content they encounter.

IMDA also runs workshops, webinars, and family activities to raise awareness of how to stay safe online.

Top image via Zoe Fernandez/Unsplash
